Overview

During a file migration to Azure, whether the target is Azure Files, a File Server, Azure NetApp Files (ANF), or any other system reached through a Universal Naming Convention (UNC) path, it is often desirable to retain the original UNC path. Otherwise, Administrators will typically have to do one or more of the following:

- Update Logon Scripts

- Update GPOs

- Provide manual instructions to users on remapping their Network Drives to the new UNC Path

- Etc…

Each of these methods often involves downtime. After the file migration has completed and users are ready for the new UNC path, they must wait for the Logon Scripts to finish running and remap the network drive, or wait for the GPO to apply. With manual instructions, users may not follow the steps or something may go wrong. Each method carries an inherent risk of failure that results in calls to the helpdesk.

There is a more seamless solution: the Root Consolidation feature of Distributed File System Namespaces (DFS-N). This feature allows you to remap a UNC Path to DFS-N, which then contains the new file shares as targets. Explaining what DFS-N is falls outside the scope of this article, as it is a long-established technology with many existing articles. See this page for an overview: DFS Namespaces overview | Microsoft Docs.

When using DFS-N, there are two deployment methods for your DFS Namespace Root Servers:

- Domain-based namespace such as \\domain.com\

- Standalone namespace such as \\server\

A Domain-based namespace allows you to have multiple root servers, which provides automatic High Availability for your namespace. Unfortunately, Domain-based namespaces do not support Root Consolidation. DFS Root Consolidation takes an old UNC Path and remaps it to your DFS Namespace so that the DFS Namespace now governs the old UNC Path. Because Domain-based namespaces do not support DFS Root Consolidation, we will need to leverage a Standalone Namespace, and the way to make a Standalone Namespace Highly Available is a Windows Server Cluster.

Therefore, in this article, I will walk through:

- Standing up a Windows Server Cluster in Azure with 2 DFS Namespace Standalone Servers with the following characteristics:

- Windows Server 2022 as the Operating System.

- Joined to our Active Directory Domain

- Two Shared Data Disks: one for the quorum disk and one for DFS Namespace

- Standing up an Azure Load Balancer to Front-End our 2 DFS Namespace Standalone Servers. This is required because Azure does not support Gratuitous ARP for the Windows Cluster Client Access Point.

- Setting up a DFS Namespace to target an Azure Files UNC Path

- Remapping an old Windows File Server’s FQDN to the DFS Namespace and configuring DFS Root Consolidation

If you are following along, you will want to deploy the following as we will not be walking through these:

- An Active Directory Domain Controller (can be deployed in Azure or On-Premises). Be sure to set its Private IP to Static.

- Two Azure Virtual Machines for our DFS Cluster that are joined to your Active Directory Domain. I will walk through creating and attaching the two shared Data Disks. Be sure to set each Virtual Machine’s Private IP to Static.

- Azure Files with a share with some sample data (can simply be a single empty .txt file).

DFS Cluster Preparation – Quorum Disk

You should have 2 Virtual Machines created with no Data Disks. Let’s go ahead and create our first Shared Managed Disk that will become our Quorum Disk. We will create this as a Standard SSD Disk. After our Cluster is built, we’ll create a second Shared Disk as a Premium SSD and add it to the Cluster.

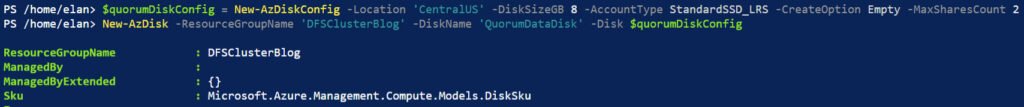

Let’s create our Quorum Disk with the following parameters:

- Same Region as our Virtual Machines. For us, that is CentralUS

- CreateOption as Empty

- MaxSharesCount as 2 as we have 2 DFS Namespace Servers

- DiskSizeGB as 8GB as we will only be leveraging it for Quorum. You can even go lower.

$quorumDiskConfig = New-AzDiskConfig -Location 'CentralUS' -DiskSizeGB 8 -AccountType StandardSSD_LRS -CreateOption Empty -MaxSharesCount 2

New-AzDisk -ResourceGroupName 'DFSClusterBlog' -DiskName 'QuorumDataDisk' -Disk $quorumDiskConfig

After creating our disk, you will see the PowerShell object information returned in the PowerShell window.

You can also confirm the disk was created in your Resource Group.
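If you prefer to confirm from PowerShell rather than the portal, a minimal sketch along these lines should work, assuming the same Resource Group and disk name used above. The selected properties are simply the ones I would check first.

```powershell
# Read the disk back and show the settings that matter for a shared disk.
# MaxShares should report 2, matching -MaxSharesCount in the disk config.
Get-AzDisk -ResourceGroupName 'DFSClusterBlog' -DiskName 'QuorumDataDisk' |
    Select-Object Name, Location, DiskSizeGB, MaxShares, ProvisioningState
```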

Let’s attach the Quorum Disk to both our Virtual Machines, using the following commands:

$resourceGroup = "DFSClusterBlog"

$vm1 = "DFSClusBlog01"

$vm2 = "DFSClusBlog02"

$quorumDiskName = "QuorumDataDisk"

$vm1 = Get-AzVm -ResourceGroupName $resourceGroup -Name $vm1

$vm2 = Get-AzVm -ResourceGroupName $resourceGroup -Name $vm2

$quorumDisk = Get-AzDisk -ResourceGroupName $resourceGroup -DiskName $quorumDiskName

$vm1addQuorum = Add-AzVMDataDisk -VM $vm1 -Name $quorumDiskName -CreateOption Attach -ManagedDiskId $quorumDisk.Id -Lun 0

$vm2addQuorum = Add-AzVMDataDisk -VM $vm2 -Name $quorumDiskName -CreateOption Attach -ManagedDiskId $quorumDisk.Id -Lun 0

Update-AzVM -VM $vm1 -ResourceGroupName $resourceGroup

Update-AzVM -VM $vm2 -ResourceGroupName $resourceGroup

After running the preceding commands, you should see no errors, and the responses should show the Shared Data Disk was successfully added to both Virtual Machines.

Go onto both Virtual Machines, open Disk Management, initialize the disk (GPT is fine), partition, and format the disks. We’ll assign the Drive Letter as Q for Quorum. Again, do this on both Servers. Later on, when we set up the Cluster, it will take care of Disk Ownership.
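The Disk Management steps can also be scripted. The following is a rough sketch rather than the exact procedure I used; it assumes the shared disk is the only uninitialized (RAW) disk on the server where you run it, and the volume label is just an example.

```powershell
# Run in an elevated PowerShell session on the server.
# Assumption: the new shared disk is the only disk still showing as RAW.
$disk = Get-Disk | Where-Object PartitionStyle -eq 'RAW'

# Initialize as GPT, create a single partition with drive letter Q,
# and format it as NTFS. 'Quorum' is an example label.
$disk |
    Initialize-Disk -PartitionStyle GPT -PassThru |
    New-Partition -DriveLetter Q -UseMaximumSize |
    Format-Volume -FileSystem NTFS -NewFileSystemLabel 'Quorum' -Confirm:$false
```

If the disk no longer shows as RAW on a server, `$disk` will be empty and there is nothing left to initialize there.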

DFS Cluster Setup

Now that we have our Quorum Disk on both DFS Servers, let’s go ahead and set up the Windows Cluster. On both DFS Servers, open Windows PowerShell as Administrator and run the following command:

Install-WindowsFeature -Name Failover-Clustering -IncludeManagementTools

Once installation has completed, hop on your first DFS Server and open Administrative Tools > Failover Cluster Manager.

Add both servers:

Run through Cluster Validation and make sure there are no significant warnings/errors. Then give your Cluster a name of 15 characters or fewer.

Create the cluster and verify the cluster was created successfully.

Verify both nodes are up.

Verify the disk is Online and it is owned by one of the DFS Cluster Nodes.

I double-clicked Cluster Disk 1, which is assigned as the Disk Witness, and gave it a new name.
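For those who would rather script the cluster build, here is a hedged sketch of roughly equivalent FailoverClusters cmdlets. The node names match the Virtual Machines used earlier; the cluster name 'DFSBlogClus' and the new disk name 'Quorum Disk' are hypothetical, and depending on your environment New-Cluster may need additional parameters (for example a static address).

```powershell
# Assumption: both nodes already have the Failover-Clustering feature installed.
$nodes = 'DFSClusBlog01', 'DFSClusBlog02'

# Run the validation tests and review the generated report before continuing.
Test-Cluster -Node $nodes

# Create the cluster. 'DFSBlogClus' is a hypothetical name (15 characters or fewer).
New-Cluster -Name 'DFSBlogClus' -Node $nodes

# Confirm both nodes are Up, the disk is Online, and see which node owns it.
Get-ClusterNode
Get-ClusterResource

# Show the current quorum configuration (the Disk Witness).
Get-ClusterQuorum

# Rename the witness disk. 'Cluster Disk 1' is the default name the wizard assigned for me.
(Get-ClusterResource -Name 'Cluster Disk 1').Name = 'Quorum Disk'
```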

DFS Cluster Preparation – DFS Data Disk

Now that we have our Cluster operational with our Quorum Disk, let’s go ahead and create our second Shared Managed Disk that will become our DFS Data Disk. We will create this as a Premium SSD Disk.

Let’s create our DFS Data Disk with the following parameters:

- Same Region as our Virtual Machines. For us, that is CentralUS

- CreateOption as Empty

- MaxSharesCount as 2 as we have 2 DFS Namespace Servers

- DiskSizeGB as 256GB (P15).

$dfsDiskConfig = New-AzDiskConfig -Location 'CentralUS' -DiskSizeGB 256 -AccountType Premium_LRS -CreateOption Empty -MaxSharesCount 2

New-AzDisk -ResourceGroupName 'DFSClusterBlog' -DiskName 'DFSDataDisk' -Disk $dfsDiskConfig

After creating our disk, you will see the PowerShell object information returned in the PowerShell window.

You can also confirm the disk was created in your Resource Group.

Let’s attach the DFS Data Disk to both our Virtual Machines, using the following commands:

$resourceGroup = "DFSClusterBlog"

$vm1 = "DFSClusBlog01"

$vm2 = "DFSClusBlog02"

$DFSDiskName = "DFSDataDisk"

$vm1 = Get-AzVm -ResourceGroupName $resourceGroup -Name $vm1

$vm2 = Get-AzVm -ResourceGroupName $resourceGroup -Name $vm2

$dfsDataDisk = Get-AzDisk -ResourceGroupName $resourceGroup -DiskName $DFSDiskName

$vm1addDFS = Add-AzVMDataDisk -VM $vm1 -Name $DFSDiskName -CreateOption Attach -ManagedDiskId $dfsDataDisk.Id -Lun 1

$vm2addDFS = Add-AzVMDataDisk -VM $vm2 -Name $DFSDiskName -CreateOption Attach -ManagedDiskId $dfsDataDisk.Id -Lun 1

Update-AzVM -VM $vm1 -ResourceGroupName $resourceGroup

Update-AzVM -VM $vm2 -ResourceGroupName $resourceGroup

After running the preceding commands, you should see no errors, and the responses should show the Shared Data Disk was successfully added to both Virtual Machines.

Go onto both Virtual Machines, open Disk Management, initialize the disk (GPT is fine), partition, and format the disks. We’ll assign the Drive Letter as N for DFS Namespace. Again, do this on both Servers. Later on, when we add DFS as a role to the Cluster, it will take care of Disk Ownership.

Cluster DFS Role Setup

On both DFS Servers, open Windows PowerShell as Administrator and run the following command:

Install-WindowsFeature "FS-DFS-Namespace", "RSAT-DFS-Mgmt-Con"

Once installation has completed, go onto one of your DFS Cluster Nodes, open Failover Cluster Manager, navigate to Storage > Disks, and choose Add Disk.

Select the new disk.

The new disk has been added to the Cluster.

Just as we did with the Quorum Disk, let’s double-click the new disk and give it a new name.
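The same step can be sketched in PowerShell. This assumes the DFS Data Disk is the only disk that is visible to both nodes but not yet part of the cluster; the new name 'DFS Data Disk' is just an example.

```powershell
# List disks that are eligible to be clustered, then add them to the cluster.
# Assumption: exactly one disk is returned (the DFS Data Disk).
$added = Get-ClusterAvailableDisk | Add-ClusterDisk

# Give the new cluster disk a friendlier name (example name).
$added.Name = 'DFS Data Disk'

# Confirm the disk now appears as a cluster resource.
Get-ClusterResource
```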

Now let’s go ahead and set up DFS in our Cluster. Right-Click Roles and choose Configure Role

Choose DFS Namespace Server

Give your DFS Client Access Point a name of 15 characters or fewer. This is the UNC name users would connect to if you were not using DFS Root Consolidation.

Select the new Cluster Disk we made available to the Cluster.

Create your first Namespace. As you will see later, we will create additional Namespaces, one for each UNC Path for which we want to leverage DFS Root Consolidation. It’s ok if you don’t know how DFS Root Consolidation works yet. You will gain an understanding of it as you read on.

Go ahead and complete the installation of your DFS Role. We will see it is in a failed state. There are two reasons for this:

- We need to configure a Static IP

- We will need to configure an Azure Load Balancer leveraging the same Private IP Address we configure on our DFS Role’s Client Access Point. This is because Azure does not support Gratuitous ARP and thus, we need an Azure Load Balancer to handle the Static Private IP of our DFS Client Access Point, DFSBlog.shudnow.net.

Let’s open our DFSBlog Client Access Point, select Resources, and select our IP Address.

Double-click on our IP Address and assign a Static IP Address. This will need to be an available IP Address in your Azure subnet. Again, this is the same IP Address we will use for the Front End of our Azure Load Balancer.

Now that we have a Static IP configured for our DFS Role, Start the DFS Role.

Our DFS Role is now running with all resources showing as Online.
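As a sketch of how the same change could be made from PowerShell: the snippet below assumes the role's cluster group is named 'DFSBlog' (matching the Client Access Point) and uses a hypothetical address of 10.0.0.100 with a /24 mask. Substitute an available IP Address from your own subnet.

```powershell
# Find the IP Address resource that belongs to the DFS role.
# Assumption: the role (cluster group) is named 'DFSBlog'.
$ipResource = Get-ClusterGroup -Name 'DFSBlog' |
    Get-ClusterResource |
    Where-Object { $_.ResourceType.Name -eq 'IP Address' }

# Assign a static address. 10.0.0.100 / 255.255.255.0 are hypothetical values.
$ipResource | Set-ClusterParameter -Multiple @{
    Address    = '10.0.0.100'
    SubnetMask = '255.255.255.0'
    EnableDhcp = 0
}

# Start the role and confirm that all of its resources come Online.
Start-ClusterGroup -Name 'DFSBlog'
Get-ClusterGroup -Name 'DFSBlog' | Get-ClusterResource
```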

In DNS, we can also see the appropriate DNS records have been created.

However, if you try to access \\DFSBlog\, nothing will appear. Again, this is because the Cluster is in Azure, which does not support Gratuitous ARP, so we will need an Azure Load Balancer.

Azure Load Balancer Setup

When creating our Load Balancer, make sure to deploy in the same Azure Region as your DFS Cluster. We’ll be creating a Standard Internal Load Balancer.

For the Front End, use the same IP Address that our DFSBlog Client Access Point was configured for.

For our Back End, we will add both our DFS Cluster Nodes.

Our DFS Load Balancing Rule will use HA Ports to allow any ports to flow to the DFS Servers.

The Health Probe configuration will be configured for TCP 135 with Remote Procedure Call (RPC).
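I built the Load Balancer in the portal, but the settings above can be sketched with Az PowerShell roughly as follows. The Virtual Network, subnet, NIC, and Load Balancer names, as well as the 10.0.0.100 Front End address, are all hypothetical placeholders; the probe interval and count are example values.

```powershell
# Hypothetical names and addresses; replace them with your own.
$rg       = 'DFSClusterBlog'
$location = 'CentralUS'
$vnet     = Get-AzVirtualNetwork -ResourceGroupName $rg -Name 'DFSBlogVNet'
$subnet   = Get-AzVirtualNetworkSubnetConfig -VirtualNetwork $vnet -Name 'default'

# Front End uses the same Private IP as the DFSBlog Client Access Point.
$frontEnd = New-AzLoadBalancerFrontendIpConfig -Name 'DFSBlogFrontEnd' `
    -PrivateIpAddress '10.0.0.100' -SubnetId $subnet.Id
$backEnd  = New-AzLoadBalancerBackendAddressPoolConfig -Name 'DFSBlogBackEnd'

# Health Probe on TCP 135 (RPC).
$probe = New-AzLoadBalancerProbeConfig -Name 'DFSBlogProbe' `
    -Protocol Tcp -Port 135 -IntervalInSeconds 5 -ProbeCount 2

# HA Ports rule: protocol All with front-end and back-end port 0.
$rule = New-AzLoadBalancerRuleConfig -Name 'DFSBlogHAPorts' `
    -FrontendIpConfiguration $frontEnd -BackendAddressPool $backEnd `
    -Probe $probe -Protocol All -FrontendPort 0 -BackendPort 0

# Standard Internal Load Balancer.
$lb = New-AzLoadBalancer -ResourceGroupName $rg -Name 'DFSBlogLB' `
    -Location $location -Sku Standard `
    -FrontendIpConfiguration $frontEnd -BackendAddressPool $backEnd `
    -Probe $probe -LoadBalancingRule $rule

# Add the NIC of each DFS Cluster Node to the Back End pool.
foreach ($nicName in 'DFSClusBlog01-nic', 'DFSClusBlog02-nic') {
    $nic = Get-AzNetworkInterface -ResourceGroupName $rg -Name $nicName
    $nic.IpConfigurations[0].LoadBalancerBackendAddressPools = $lb.BackendAddressPools
    Set-AzNetworkInterface -NetworkInterface $nic
}
```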

Create the Load Balancer resource and once created, in the Load Balancer, navigate to Insights.

Verify our two Backend Servers (our two DFS Cluster nodes) show as Healthy (green checkmarks).

Go back to the same server from which you previously tried, and failed, to access \\DFSBlog\ and retry.

In our existing DFS Namespace, which I named DFSNamespace, let’s try adding our target. I have an ADDS-Connected File Share I use for FSLogix in my AVD Lab that I will be using. On one of my DFS Cluster Nodes, let’s open the DFS Management Console, which is also under Administrative Tools. We will notice our Namespace automatically shows up.

Let’s create a new folder in our namespace that will point to our FSLogix File Share.

During creation, you will be prompted with the following error. Go ahead and select yes.

Our DFS Namespace now has our Target folder that has our FSLogix File Share as its target.
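For reference, the DFSN PowerShell module installed alongside the DFS Management tools can create the same folder. This is only a sketch: the namespace path assumes the Namespace is named DFSNamespace with a folder called Target, and the Azure Files UNC path shown is a hypothetical placeholder for your own share.

```powershell
# Assumptions: namespace '\\DFSBlog\DFSNamespace' exists and the target
# share below is a placeholder for your own Azure Files share.
New-DfsnFolder -Path '\\DFSBlog\DFSNamespace\Target' `
    -TargetPath '\\mystorageaccount.file.core.windows.net\fslogix'

# List the folder targets to confirm the new folder points where we expect.
Get-DfsnFolderTarget -Path '\\DFSBlog\DFSNamespace\Target'
```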

If we go back to our other server and browse to \\DFSBlog\Target, we should see some data.

Bingo! Our DFS Cluster is functional and DFS Targets are working. But how do I use DFS Namespaces to retain my original UNC Paths after migrating to Azure Files, a File Server in Azure, or Azure NetApp Files? We’ll need to configure DFS Root Consolidation for that. Read on…

DFS Namespace Root Consolidation

DFS Root Consolidation is a feature that allows you to:

- Remap a Server FQDN (we’re going to use the FQDN OldServer.shudnow.net) to point to the DFS Client Access Point (dfsblog.shudnow.net for us).

- For the DFS Client Access Point, modify the Service Principal Name to contain the old server’s NetBIOS name and FQDN

- Configure a Namespace to match the old server so DFS will accept the old server’s NetBIOS name and FQDN as a Namespace.

On each DFS Cluster Node, run the following PowerShell commands as an Administrator and then reboot each server. Do them one by one and monitor Failover Cluster Manager to make sure the node you rebooted is operational again before moving on to the next server.

# Create each registry key only if it does not already exist (the Dfs key is usually present once DFS Namespaces is installed).

$dfsKey = "HKLM:\SYSTEM\CurrentControlSet\Services\Dfs"

foreach ($key in $dfsKey, "$dfsKey\Parameters", "$dfsKey\Parameters\Replicated") { if (-not (Test-Path $key)) { New-Item -Path $key | Out-Null } }

# Enable DFS Root Consolidation by setting ServerConsolidationRetry to 1 (DWORD).

Set-ItemProperty -Path "$dfsKey\Parameters\Replicated" -Name "ServerConsolidationRetry" -Value 1 -Type DWord
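To make the one-by-one reboot a little safer, something like the following sketch can be run on each node in turn. It uses standard FailoverClusters cmdlets to drain the node before the reboot; this is a suggestion rather than a required part of the procedure.

```powershell
# Confirm the registry value was written on this node.
Get-ItemProperty -Path 'HKLM:\SYSTEM\CurrentControlSet\Services\Dfs\Parameters\Replicated' `
    -Name 'ServerConsolidationRetry'

# Drain roles off this node and wait for the drain to finish, then reboot.
Suspend-ClusterNode -Name $env:COMPUTERNAME -Drain -Wait
Restart-Computer -Force

# After the server is back, run these on it before moving on to the next node.
Resume-ClusterNode -Name $env:COMPUTERNAME
Get-ClusterNode
```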

On one of the DFS Cluster Nodes, open the DFS Management Console and create a new Namespace.

Select your DFS Cluster’s Client Access Point as the namespace server.

The Namespace Name will be the NetBIOS name of our old server with a # in front of it. It is important that you add the # so DFS knows this Namespace is leveraging Root Consolidation.

Create your Namespace and validate the new Namespace has been created.
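One way to validate from PowerShell, assuming the Client Access Point is named DFSBlog, is to list the namespace roots it hosts. You should see the new #oldserver root alongside the first Namespace.

```powershell
# List every DFS Namespace root hosted by the clustered namespace server.
# Assumption: 'DFSBlog' is the DFS Client Access Point name.
Get-DfsnRoot -ComputerName 'DFSBlog' | Select-Object Path, Type, State
```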

In Failover Cluster Manager, we can also see under our DFS Client Access Point our new Namespace has automatically been added to the Cluster.

In the DFS Management Console, let’s add a new folder to our new #oldserver Namespace pointing to the same target we previously used, our FSLogix File Share.

Let’s hop into our DNS Management Console on your Domain Controller, delete your oldserver DNS Record and create a new A Record pointing to the Front End IP of your Azure Load Balancer which is also the IP Address of your DFS Cluster Client Access Point.
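If you would rather script the DNS change, a sketch using the DnsServer module on the Domain Controller could look like this. The zone name matches the lab domain used in this article, while 10.0.0.100 is a hypothetical stand-in for your Load Balancer's Front End IP.

```powershell
# Run on the Domain Controller (or any machine with the DnsServer module).
# Remove the old A record for oldserver.
Remove-DnsServerResourceRecord -ZoneName 'shudnow.net' -Name 'oldserver' -RRType 'A' -Force

# Create a new A record pointing at the Load Balancer Front End IP.
# 10.0.0.100 is a hypothetical address.
Add-DnsServerResourceRecordA -ZoneName 'shudnow.net' -Name 'oldserver' -IPv4Address '10.0.0.100'

# Confirm the name now resolves to the new address.
Resolve-DnsName -Name 'oldserver.shudnow.net' -Type A
```

Clients may keep the old address cached until their DNS cache expires or is flushed.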

Using a Domain Administrator account, run the following commands in an Administrator Command Prompt to delete the Service Principals off the old computer object for OldServer.

setspn -d HOST/oldserver oldserver

setspn -d HOST/oldserver.shudnow.net oldserver

And run the following commands to add the Service Principals to the DFS Client Access Point:

setspn -a HOST/oldserver DFSBlog

setspn -a HOST/oldserver.shudnow.net DFSBlog

In Active Directory Users and Computers, as long as View > Advanced Features is turned on, open the DFSBlog Client Access Point Computer Object, go to Attribute Editor, servicePrincipalName, and verify the two new Service Principal Names have been added.
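The same check can be done from the command line with setspn itself, which is handy if you don't have Advanced Features turned on:

```powershell
# List all Service Principal Names registered on the DFSBlog computer object.
setspn -L DFSBlog

# Query the domain for each SPN to confirm which account now holds it.
setspn -Q HOST/oldserver
setspn -Q HOST/oldserver.shudnow.net
```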

Now let’s try going to the \\oldserver\target UNC Path and we should see our Azure File Share data.
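A quick way to test from a domain-joined client is sketched below. It only uses the names from this article and assumes your account has access to the underlying share.

```powershell
# Returns True if the old UNC Path is reachable through DFS Root Consolidation.
Test-Path -Path '\\oldserver\target'

# List the contents, which should be the data on the Azure File Share.
Get-ChildItem -Path '\\oldserver\target'
```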

Conclusion

As you can see, DFS Root Consolidation can be a handy tool to leverage if you require, or desire, to keep your old UNC Paths around as you migrate your data to Azure. If you have any questions, feel free to add a comment.
